Prepare imageclipper tool:

1. Download imageclipper (link) via git:

https://github.com/JoakimSoderberg/imageclipper

2. Install Boost and OpenCV. A prebuilt boost is available at http://boost.teeks99.com/ (use correct version for corresponding platform)

3. Build imageclipper from source (CMake is required) by following the instructions here.

4. Build/Compile Project (Visual Studio for Windows)

Modify the code for square bounding box purpose:

1. Locate line 619 in the imageclipper.cpp

2. Comment line 619 and add one line:

// param->rect.height = abs( point0.y - y );

param->rect.height = param->rect.width;

Therefore height is always equal to width. Rebuild project after modification

Run imageclipper.exe:

1. Follow instructions explained the command usage: link

2. Create output image format (-i for video input, -v for video input):

For example, this for a video input

%d/imageclipper/%i_%f_%04x_%04y_%04w_%04h.png

will create filename like:

video_1_0276_0134_0055_0055.png

Create a txt file for crop region:

See instructions here.

The cropping GUI should looks like this:

The region on the tool I would like to crop include:

iDot, IS_Logo, Wheel_Pin, Wheel

Please refer to Fig.4 in this paper for reference: Appearance learning for 3D tracking of robotic surgical tools

Some examples for cropped samples:

Wednesday, 17 December 2014

Thursday, 20 November 2014

Hand-eye calibration for DVRK

To validate the hand-eye calibration:

Handeye problem as AX=XB:

To validate handeye by backprojecting to image

Monday, 9 June 2014

Hand-eye calibration

Install MATLAB calibration toolbox: link

Install addon for the hand-eye calibration: link

Note:

In order to use normal calibration pattern (instead of the dots pattern provided by the hand-eye program), we need to do: "active_images = 1:num_of_views;" before calling "handeye.m".

Step 1: Record stable frames number in both left and right video, save it in a txt file.

Step 2: Copy/Paste the frames to the calibration folder, rename them from XXX_0000 to XXX_0020 (for example).

Step 3: Run calib.m (MATLAB calibration toolbox). Go through the normal camera calibration procedure.

Step 4: For the hand-eye calibration, import tracking data of marker that was attached to the camera (or robot kinematic data).

Step 5: Smooth the tracking data for both position and quaternion based on the frame number recorded in the Step 1.

Step 6: Run handeye.m.

Install addon for the hand-eye calibration: link

Note:

In order to use normal calibration pattern (instead of the dots pattern provided by the hand-eye program), we need to do: "active_images = 1:num_of_views;" before calling "handeye.m".

Step 1: Record stable frames number in both left and right video, save it in a txt file.

Step 2: Copy/Paste the frames to the calibration folder, rename them from XXX_0000 to XXX_0020 (for example).

Step 3: Run calib.m (MATLAB calibration toolbox). Go through the normal camera calibration procedure.

Step 4: For the hand-eye calibration, import tracking data of marker that was attached to the camera (or robot kinematic data).

Step 5: Smooth the tracking data for both position and quaternion based on the frame number recorded in the Step 1.

Step 6: Run handeye.m.

Wednesday, 14 May 2014

OpenCV save cv::Mat in double/float precision

In order to read the OpenCV matrix in double/float precision in MATLAB, we can save the matrix using:

Above code save the matrix into a csv (comma-separated values) file which can be imported by MATLAB easily using 'load' function.

Reference:

Saving matrix in double precision from OpenCV (C++) for Matlab

std::ofstream myfile;

myfile.open("output.csv");

myfile << format(outMat,"csv") << std::endl << std::endl;

myfile.close();

Above code save the matrix into a csv (comma-separated values) file which can be imported by MATLAB easily using 'load' function.

Reference:

Saving matrix in double precision from OpenCV (C++) for Matlab

Labels:

OpenCV

Tuesday, 6 May 2014

PatchMatch Multiscale implemantation

In this post I record

the multi-scale implementation of the PatchMatch Stereo algorithm.

The aim is to create a

pyramid to process the algorithm in different scale so that speed and accuracy

may be improved.

·

Start from the smallest

scale and for each scale:

o Downsampling the original size image to

current scale

o Initialize a new object of the CostFunction

class (including image border enlarge and grad image calculation)

o For

each pixel in the current scale image:

§ Spatial

propagation: calculate the cost for the neighbors and use the plane that gives

minimal cost

§ View propagation: replace current plane if the

plane of the corresponding pixel in the other view gives smaller cost

§ Plane refinement

§ All above procedures are processed in order (Image A: top-left to bottom right; Image B: top-left to bottom right; Image A: bottom right to top-left; Image B: bottom right to top-left)

§ All above procedures are processed in order (Image A: top-left to bottom right; Image B: top-left to bottom right; Image A: bottom right to top-left; Image B: bottom right to top-left)

·

Post-processing

Indexing the Patch Image after downsample:

For each patch in a patch image, it is indexed based on its (x, y) coordinate and width of the image.

Patch_Img_A[y * _imgSize.width + x]

However, if we want to retrieve the corresponding patch in coarser image, we need to do:

int yy = y / 2, xx = x / 2; Patch_Img_Pre_A[yy * currLImage.cols / 2 + xx];

Negative disparity value:

Although the plane parameters can result in negative disparity, I assume all the disparity are non-negative in my program. If a too large (> maxDisparity) or too small (< 0) disparity is calculated, I replace it with maxDisparity or absolute value respectively. It does not make much sense in terms of the disparity is the pixel difference but it keeps the program simpler.

Time measurement with OpenCV:

double t = (double)getTickCount(); // do something ... t = ((double)getTickCount() - t)/getTickFrequency(); cout << "Times passed in seconds: " << t << endl;

Patch Image from small scale to large scale:

A finer scale patch image is produced by coping corresponding plane parameters (i.e. a, b and c) in coarser scale. Better interpolation strategy should be explored (e.g. bilinear/ bi-cubic)

Fast converging:

It may not be necessary to iterate at a patch that is nearly converged. One idea is detect converged patches at coarser scale which is fast and not update these patches in finer scale.

Runtime (in second):

Input image dimension: 360x288

Layers: 3

Windows: 35x35 (finest layer)

1st (coarsest): 0.364 - 0.350 - 0.363 - 0.350

2nd: 5.744 - 5.500 - 5.743 - 5.503

3rd (finest): 108.073 - 103.807 - 109.202 - 103.938

Reference:

Thursday, 1 May 2014

Belief propagation for stereo tutorial

1. Loopy belief propagation, Markov Random Field, stereo vision

A very nice and detailed tutorial about using belief propagation and MRF to solve the stereo problem. Some maths have been presented clearly and simply. A self-contained belief propagation code for stereo is attached (main.cpp ).

2. Stereovision reconstruction system

A project implementing paper: "Segment-Based Stereo Matching Using Belief Propagation and a Self-Adapting Dissimilarity Measure (ICPR 2006)" using OpenCV.

Loopy Belief Propagation code:

A MRF is a grid of node with same size as the image. For each node in the MRF, 5 link is construct to its neighbour and each link contains 'number of label' cells for storing massage.

For every input image pair, we need to initialize the MRF correspondingly. For all the message at the very beginning stage, all message are initialized to 0 (or 1 depends on optimization) which means each node does not have any opinion about its neighbors. For the messages of each node itself, we initialize them based on the DataCost function. The DataCost function can be as simple as the intensity difference.

For propagating the message at each node to different direction (LEFT/RIGHT/UP/DOWN), 'SendMsg' function iterate every possible labels l for the corresponding link (each link contains multiple messages). For each label,we calculate the minimum cost/energy (Min-Sum BP) out of all possible labels l'. This minimum cost/energy is recorded for that label l of the link. Then use this minimum cost to update the message of the neighbor node so that link A-B and B-A has same message now.

At last, we assign each node (pixel) with the label that minimize the global energy.

Main program which calls above function:

A very nice and detailed tutorial about using belief propagation and MRF to solve the stereo problem. Some maths have been presented clearly and simply. A self-contained belief propagation code for stereo is attached (main.cpp ).

2. Stereovision reconstruction system

A project implementing paper: "Segment-Based Stereo Matching Using Belief Propagation and a Self-Adapting Dissimilarity Measure (ICPR 2006)" using OpenCV.

Loopy Belief Propagation code:

A MRF is a grid of node with same size as the image. For each node in the MRF, 5 link is construct to its neighbour and each link contains 'number of label' cells for storing massage.

struct Pixel

{

// Each pixel has 5 'message box' to store incoming data

TYPE msg[5][LABELS];

int best_assignment;

};

struct MRF2D

{

std::vector grid;

int width, height;

};

For every input image pair, we need to initialize the MRF correspondingly. For all the message at the very beginning stage, all message are initialized to 0 (or 1 depends on optimization) which means each node does not have any opinion about its neighbors. For the messages of each node itself, we initialize them based on the DataCost function. The DataCost function can be as simple as the intensity difference.

void InitDataCost(const std::string &left_file, const std::string &right_file, MRF2D &mrf)

{

// Cache the data cost results so we don't have to recompute it every time

// Force greyscale

cv::Mat left = cv::imread(left_file.c_str(), 0);

cv::Mat right = cv::imread(right_file.c_str(), 0);

if(!left.data) {

cerr << "Error reading left image" << endl;

exit(1);

}

if(!right.data) {

cerr << "Error reading right image" << endl;

exit(1);

}

assert(left.channels() == 1);

mrf.width = left.cols;

mrf.height = left.rows;

int total = mrf.width*mrf.height;

mrf.grid.resize(total);

// Initialise all messages to zero

for(int i=0; i < total; i++) {

for(int j=0; j < 5; j++) {

for(int k=0; k < LABELS; k++) {

mrf.grid[i].msg[j][k] = 0;

}

}

}

// Add a border around the image

int border = LABELS;

for(int y=border; y < mrf.height-border; y++) {

for(int x=border; x < mrf.width-border; x++) {

for(int i=0; i < LABELS; i++) {

mrf.grid[y*left.cols+x].msg[DATA][i] = DataCostStereo(left, right, x, y, i);

}

}

}

}

For propagating the message at each node to different direction (LEFT/RIGHT/UP/DOWN), 'SendMsg' function iterate every possible labels l for the corresponding link (each link contains multiple messages). For each label,we calculate the minimum cost/energy (Min-Sum BP) out of all possible labels l'. This minimum cost/energy is recorded for that label l of the link. Then use this minimum cost to update the message of the neighbor node so that link A-B and B-A has same message now.

void SendMsg(MRF2D &mrf, int x, int y, DIRECTION direction)

{

TYPE new_msg[LABELS];

int width = mrf.width;

for(int i=0; i < LABELS; i++) {

TYPE min_val = UINT_MAX;

for(int j=0; j < LABELS; j++) {

TYPE p = 0;

p += SmoothnessCost(i,j);

p += mrf.grid[y*width+x].msg[DATA][j];

// Exclude the incoming message direction that we are sending to

if(direction != LEFT) p += mrf.grid[y*width+x].msg[LEFT][j];

if(direction != RIGHT) p += mrf.grid[y*width+x].msg[RIGHT][j];

if(direction != UP) p += mrf.grid[y*width+x].msg[UP][j];

if(direction != DOWN) p += mrf.grid[y*width+x].msg[DOWN][j];

min_val = std::min(min_val, p);

}

new_msg[i] = min_val;

}

for(int i=0; i < LABELS; i++) {

switch(direction) {

case LEFT:

mrf.grid[y*width + x-1].msg[RIGHT][i] = new_msg[i];

break;

case RIGHT:

mrf.grid[y*width + x+1].msg[LEFT][i] = new_msg[i];

break;

case UP:

mrf.grid[(y-1)*width + x].msg[DOWN][i] = new_msg[i];

break;

case DOWN:

mrf.grid[(y+1)*width + x].msg[UP][i] = new_msg[i];

break;

default:

assert(0);

break;

}

}

}

For each BP iteration we propagate the message from Left-Right, Right-Left, Up-Down and Down-Up.void BP(MRF2D &mrf, DIRECTION direction)

{

int width = mrf.width;

int height = mrf.height;

switch(direction) {

case RIGHT:

for(int y=0; y < height; y++) {

for(int x=0; x < width-1; x++) {

SendMsg(mrf, x, y, direction);

}

}

break;

case LEFT:

for(int y=0; y < height; y++) {

for(int x=width-1; x >= 1; x--) {

SendMsg(mrf, x, y, direction);

}

}

break;

case DOWN:

for(int x=0; x < width; x++) {

for(int y=0; y < height-1; y++) {

SendMsg(mrf, x, y, direction);

}

}

break;

case UP:

for(int x=0; x < width; x++) {

for(int y=height-1; y >= 1; y--) {

SendMsg(mrf, x, y, direction);

}

}

break;

case DATA:

assert(0);

break;

}

}

At last, we assign each node (pixel) with the label that minimize the global energy.

TYPE MAP(MRF2D &mrf)

{

// Finds the MAP assignment as well as calculating the energy

// MAP assignment

for(size_t i=0; i < mrf.grid.size(); i++) {

TYPE best = std::numeric_limits::max();

for(int j=0; j < LABELS; j++) {

TYPE cost = 0;

cost += mrf.grid[i].msg[LEFT][j];

cost += mrf.grid[i].msg[RIGHT][j];

cost += mrf.grid[i].msg[UP][j];

cost += mrf.grid[i].msg[DOWN][j];

cost += mrf.grid[i].msg[DATA][j];

if(cost < best) {

best = cost;

mrf.grid[i].best_assignment = j;

}

}

}

int width = mrf.width;

int height = mrf.height;

// Energy

TYPE energy = 0;

for(int y=0; y < mrf.height; y++) {

for(int x=0; x < mrf.width; x++) {

int cur_label = mrf.grid[y*width+x].best_assignment;

// Data cost

energy += mrf.grid[y*width+x].msg[DATA][cur_label];

if(x-1 >= 0) energy += SmoothnessCost(cur_label, mrf.grid[y*width+x-1].best_assignment);

if(x+1 < width) energy += SmoothnessCost(cur_label, mrf.grid[y*width+x+1].best_assignment);

if(y-1 >= 0) energy += SmoothnessCost(cur_label, mrf.grid[(y-1)*width+x].best_assignment);

if(y+1 < height) energy += SmoothnessCost(cur_label, mrf.grid[(y+1)*width+x].best_assignment);

}

}

return energy;

}

Main program which calls above function:

int main()

{

MRF2D mrf;

InitDataCost("tsukuba-imL.png", "tsukuba-imR.png", mrf);

for(int i=0; i < BP_ITERATIONS; i++) {

BP(mrf, RIGHT);

BP(mrf, LEFT);

BP(mrf, UP);

BP(mrf, DOWN);

TYPE energy = MAP(mrf);

}

cv::Mat output = cv::Mat::zeros(mrf.height, mrf.width, CV_8U);

for(int y=LABELS; y < mrf.height-LABELS; y++) {

for(int x=LABELS; x < mrf.width-LABELS; x++) {

// Increase the intensity so we can see it

output.at(y,x) = mrf.grid[y*mrf.width+x].best_assignment * (256/LABELS);

}

}

cv::namedWindow("main", CV_WINDOW_AUTOSIZE);

cv::imshow("main", output);

cv::waitKey(0);

cout << "Saving results to output.png" << endl;

cv::imwrite("output.png", output);

return 0;

}

Tuesday, 22 April 2014

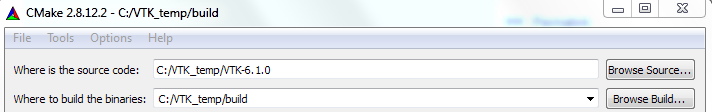

Configure VTK 6.1 + Qt 5.2 under Win7 32bit

This post is mainly focus on how to connect the VTK 6.1 with Qt 5.2.1 (latest one when writing). The main difficulty is configuration and build procedure of the VTK. Here we go:

1. Install Qt 5.2 using the online installer (link). You can build your own version of Qt yourself, but since I don't need a customized Qt, I installed the Qt 5.2.1 for Windows 32-bit (VS 2010, OpenGL) . Please choose the version compatible with your system and compiler.

Note: highly recommend you install Qt under C disk (in Window) as it may or may not make your life easier.

2. Download VTK 6.1 source code (link). Unzip it to 'C:\VTK_temp\' which can be deleted at last after VTK has been installed successfully. Create a build folder within 'C:\VTK_temp\', therefore your directory will be something like this:

C:\VTK_temp\

------VTK-6.1.0\

------build\

3. Run CMake as administrator mode (otherwise CMake can't write to the C).

Next, put the source code path and build path accordingly. Under the directory of the source code, you should find a CMakeLists.txt (double check).

4. First click of Configure button. (Can be long, wait patiently)

In the very first configure, CMake will not configure the Qt as it is not a default option.

After the configure is finished, please check 'VTK_Group_Qt' 'BUILD_EXAMPLES' and 'BUILD_SHARED_LIBS'.

Edit 'CMAKE_INSTALL_PREFIX' to where your VTK will be installed. I use ('C:/Program Files (x86)/VTK')

You may also need to add:

set(CMAKE_LIBRARY_PATH "C:\\Program Files (x86)\\Microsoft SDKs\\Windows\\v7.0A\\Lib")

to the first line of 'C:/Qt/5.2.1/msvc2010_32_opengl/lib/cmake/Qt5Gui/Qt5GuiConfigExtras.cmake'

It directory is different based on your system, the folder should contain 'GlU32.Lib' and 'OpenGL32.Lib'.

If you use 64 bit system, please choose the folder accordingly.

If you don't add the above line, you may get this error:

CMake Error at C:/Qt/5.2.1/msvc2010_32_opengl/lib/cmake/Qt5Gui/Qt5GuiConfigExtras.cmake:16 (message):

Failed to find "glu32" in "" with CMAKE_CXX_LIBRARY_ARCHITECTURE "".

5. Second click of Configure button.

If you get error like the following figure, please select your Qt version to 5 instead of 4 (default):

6. Potential error(s) in CMake

Error:

CMake Error at CMake/vtkModuleAPI.cmake:120 (message):

Requested modules not available:

vtkTestingCore

Solution: check Module_vtkTestingCore

Error:

CMake Error at CMake/vtkModuleAPI.cmake:120 (message):

Requested modules not available:

vtkTestingRendering

Solution: check Module_vtkTestingRendering

Keep press configure after each modification until you get 'configuration done'.

7. Open VTK.sln (admin mode) in the 'C:\VTK_temp\build'.

First, build the project under Release mode, wait for a while (have a rest).

Second, copy 'QVTKWidgetPlugin.lib' and 'QVTKWidgetPlugin.dll' from "C:\VTK_temp\build\bin\Release" and 'C:\VTK_temp\build\lib\Release' to 'C:\Qt\5.2.1\msvc2010_opengl\plugins\designer' (different based on where you installed your Qt).

Third, in VTK.sln, change to Debug mode, build the project again.

Fourth, right click project INSTALL, choose Project Only - Build Only INSTALL. So the VTK will be installed to where you specified the 'CMAKE_INSTALL_PREFIX'.

Fifth, check if the integration of VTK and Qt is successful.

Set the SimpleView project (still in the VTK.sln) as start-up project and then Run the program (press F5)

If the build is successful, we can see something like:

Note, in order to run the example program properly, remember add Qt and VTK's bin directory to the system 'Path'.

If you open the Qt designer.exe, you should see the QVTKWidget as shown:

Thanks to the reference, I could go through the procedure, here they are:

Reference:

1. ITK + VTK + QT on Window 7 64bit and Visual Studio 2010 Pro 32bit project

2. QT5.2.1+VTK6.1 configure (Chinese)

3. VTK 6 + Qt 5

1. Install Qt 5.2 using the online installer (link). You can build your own version of Qt yourself, but since I don't need a customized Qt, I installed the Qt 5.2.1 for Windows 32-bit (VS 2010, OpenGL) . Please choose the version compatible with your system and compiler.

Note: highly recommend you install Qt under C disk (in Window) as it may or may not make your life easier.

2. Download VTK 6.1 source code (link). Unzip it to 'C:\VTK_temp\' which can be deleted at last after VTK has been installed successfully. Create a build folder within 'C:\VTK_temp\', therefore your directory will be something like this:

C:\VTK_temp\

------VTK-6.1.0\

------build\

3. Run CMake as administrator mode (otherwise CMake can't write to the C).

Next, put the source code path and build path accordingly. Under the directory of the source code, you should find a CMakeLists.txt (double check).

4. First click of Configure button. (Can be long, wait patiently)

In the very first configure, CMake will not configure the Qt as it is not a default option.

After the configure is finished, please check 'VTK_Group_Qt' 'BUILD_EXAMPLES' and 'BUILD_SHARED_LIBS'.

Edit 'CMAKE_INSTALL_PREFIX' to where your VTK will be installed. I use ('C:/Program Files (x86)/VTK')

You may also need to add:

set(CMAKE_LIBRARY_PATH "C:\\Program Files (x86)\\Microsoft SDKs\\Windows\\v7.0A\\Lib")

to the first line of 'C:/Qt/5.2.1/msvc2010_32_opengl/lib/cmake/Qt5Gui/Qt5GuiConfigExtras.cmake'

It directory is different based on your system, the folder should contain 'GlU32.Lib' and 'OpenGL32.Lib'.

If you use 64 bit system, please choose the folder accordingly.

If you don't add the above line, you may get this error:

CMake Error at C:/Qt/5.2.1/msvc2010_32_opengl/lib/cmake/Qt5Gui/Qt5GuiConfigExtras.cmake:16 (message):

Failed to find "glu32" in "" with CMAKE_CXX_LIBRARY_ARCHITECTURE "".

5. Second click of Configure button.

If you get error like the following figure, please select your Qt version to 5 instead of 4 (default):

6. Potential error(s) in CMake

Error:

CMake Error at CMake/vtkModuleAPI.cmake:120 (message):

Requested modules not available:

vtkTestingCore

Solution: check Module_vtkTestingCore

Error:

CMake Error at CMake/vtkModuleAPI.cmake:120 (message):

Requested modules not available:

vtkTestingRendering

Solution: check Module_vtkTestingRendering

Keep press configure after each modification until you get 'configuration done'.

7. Open VTK.sln (admin mode) in the 'C:\VTK_temp\build'.

First, build the project under Release mode, wait for a while (have a rest).

Second, copy 'QVTKWidgetPlugin.lib' and 'QVTKWidgetPlugin.dll' from "C:\VTK_temp\build\bin\Release" and 'C:\VTK_temp\build\lib\Release' to 'C:\Qt\5.2.1\msvc2010_opengl\plugins\designer' (different based on where you installed your Qt).

Third, in VTK.sln, change to Debug mode, build the project again.

Fourth, right click project INSTALL, choose Project Only - Build Only INSTALL. So the VTK will be installed to where you specified the 'CMAKE_INSTALL_PREFIX'.

Fifth, check if the integration of VTK and Qt is successful.

Set the SimpleView project (still in the VTK.sln) as start-up project and then Run the program (press F5)

Note, in order to run the example program properly, remember add Qt and VTK's bin directory to the system 'Path'.

If you open the Qt designer.exe, you should see the QVTKWidget as shown:

Thanks to the reference, I could go through the procedure, here they are:

Reference:

1. ITK + VTK + QT on Window 7 64bit and Visual Studio 2010 Pro 32bit project

2. QT5.2.1+VTK6.1 configure (Chinese)

3. VTK 6 + Qt 5

Subscribe to:

Comments (Atom)